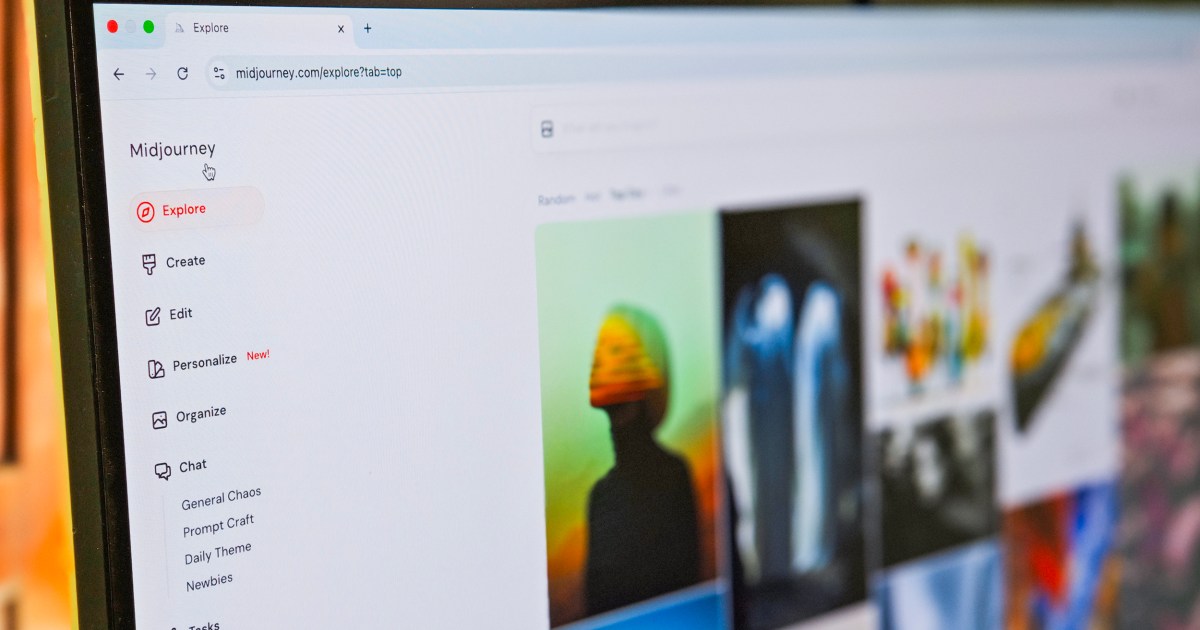

Midjourney Announces New Image Generation Model to Compete with OpenAI's GPT-4o

Midjourney has developed a new artificial intelligence image generation model that delivers faster and more accurate results.

Midjourney, one of the image generation tools that looked most innovative at its launch, has seen its relevance fade in the face of more accessible, free alternatives such as Gemini, ChatGPT, and Bing. The recent update to OpenAI's GPT-4o model, praised for producing realistic images and high-quality text, has intensified competition in the field. To reclaim its place in the market and capitalize on the current surge of Studio Ghibli-inspired AI art, Midjourney has launched a new version of its model, V7.

David Holz, CEO of Midjourney, shared details about V7 on the company's official Discord server and in a blog post. According to Holz, the new model understands text prompts better and produces images of "notably superior" quality with "beautiful textures." It is also much faster, generating images roughly ten times faster than the previous model, which makes brainstorming and rapid iteration easier.

One of V7's most innovative features is "Draft Mode," which halves the cost and speeds up generation, making it well suited to quickly iterating on ideas. In this mode, users can also explore ideas verbally: when using the Discord app, Conversational mode becomes a voice option that lets users "think out loud" while images are generated as they speak.

V7 also supports the Relax and Turbo modes, which produce high-resolution images, though at a higher cost in credits. However, some features, such as upscaling and retexturing, still rely on the previous version, V6.1. Personalization also comes to V7: users can save their aesthetic preferences through a simple process of choosing among 200 images.

Midjourney is currently running a community-driven alpha test of V7, and more features are expected over the next 60 days. To try the new version, users can change the model version in their settings, either in Discord or on the web platform.